On 14 March 2022, the OSCE Academy in Bishkek hosted an online guest lecture by Mr. Odhran McCarthy, Programme Officer at the United Nations Interregional Crime and Justice Research Institute (UNICRI), titled ‘Threats, opportunities and challenges of AI counter terrorism.’ The lecture was part of the course ‘Contemporary Security Issues’, taught by Dr Elena Zhirukhina within the MA Programme in Politics and Security.

Mr. McCarthy mapped out UNICRI’s project portfolio and focused on its research into new and emerging technologies, in particular artificial intelligence (AI). AI has enormous growth potential: its market is estimated to reach several trillion euros by 2025. Recalling the words of UN Secretary-General António Guterres, ‘Artificial intelligence is a game changer that can boost development and transform lives in spectacular fashion. But it may also have a dramatic impact on labour markets and, indeed, on global security and the very fabric of societies’, Mr. McCarthy highlighted the dual-use nature of AI, a pattern also evident in how both legitimate and illegitimate actors have historically exploited new technologies.

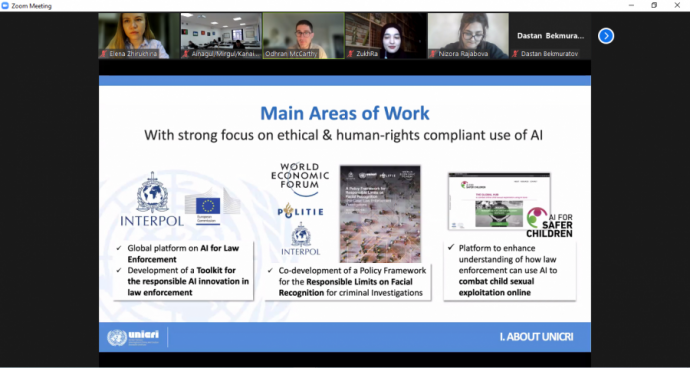

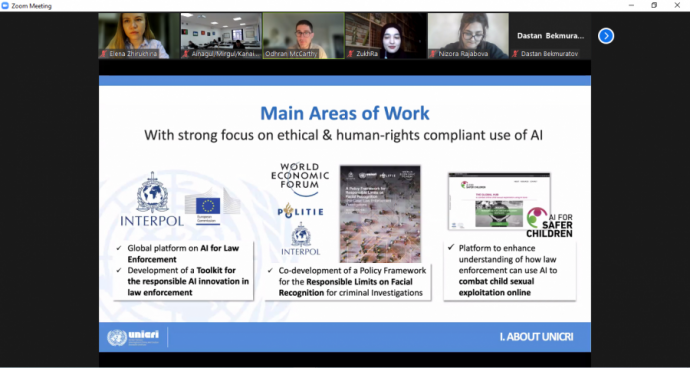

With that in mind, UNICRI, in partnership with the UN Counter-Terrorism Centre at the UN Office of Counter-Terrorism, explored both the use of AI by law enforcement in a counter-terrorism context and the malicious use and abuse of AI by violent non-state actors for terrorist purposes.

The joint report on countering terrorism online with AI proposed several ways in which AI could advance law enforcement work in countering terrorism. For instance, predictive analytics has the potential to identify terrorist attacks before they occur by analysing real-time online data that reflects the behaviour of at-risk groups or individuals. At the individual level, predictive analytics is ethically, legally and technically problematic; at a higher, collective level, however, some studies have explored how it can be used to capture trends and forecast the future behaviour of terrorist groups rather than individuals. Another example highlighted by Mr. McCarthy is the potential to identify red flags of radicalisation. AI can assist in finding individuals at risk of radicalisation in digital environments and in enabling targeted interventions. While there is no single path to radicalisation, AI-powered technology can help by signalling the consumption of, or search for, terrorist material and the use of keywords indicative of possible radicalisation or vulnerability to terrorist narratives.

The joint report on the malicious use of AI for terrorist purposes analysed how violent non-state actors, such as terrorist groups, might apply AI technology. The report identified areas of heightened alert for which law enforcement agencies should prepare: the future malicious use of AI to advance terrorists’ cyber capabilities, for example to conduct distributed denial-of-service (DDoS) attacks, spread malware and ransomware, or hack into systems through more efficient password guessing and CAPTCHA breaking. AI-powered technology also opens a window of opportunity for physical attacks through the misuse of autonomous vehicles, including drones equipped with facial recognition. A separate concern, Mr. McCarthy noted, is the use of propaganda, misinformation and disinformation for terrorist purposes, enhanced by deepfakes and other forms of content manipulation.

Mr. McCarthy concluded that, to embrace the full potential of AI for good and to further secure our societies, the international community still has a long way to go in debating ethical matters, ensuring compliance with human rights regimes, and designing appropriate policies and legal frameworks. This massive undertaking requires cooperation and coordination among multiple stakeholders at many levels, from the grassroots to the international.